What is a ‘Sophisticated Bot Attack’?

At Netacea we talk about protecting our customers from sophisticated attacks carried out by bots. But what does this actually mean? How do you know you’ve got a problem with sophisticated bot attacks?

We go into a detailed explanation below but it’s worth remembering that there is a human adversary behind all automated attacks. Although somewhat autonomous once programmed, bots do not attack a target without human intervention. The idea, after all, is for threat actors to achieve a scale that cannot be reached by humans alone.

It is this need for scalability, along with the increasing sophistication of defensive solutions, that drives the evolution of attacks and attack tools. Because bots do not act alone, adversaries need technology capable of introducing multiple different configurations of a bot (a scripted automated attack), as the detection of any one specific configuration enables the creation of heuristics that put all copies of that bot at risk.

What is an Attack?

An attack is malicious activity carried out by a single human adversary or group against a target’s web estate. As defined using the BLADE Framework, an ‘attack’ is made up of six key phases, from inception through execution to profiting. It’s possible an attack could be carried out end-to-end in a matter of minutes, while a sustained attack could also take place over days or even months. The execution of an attack may also take place in a number of waves, with previous phases looping back on themselves in response to defensive measures employed.

So, when we talk about an attack, we mean the entirety of the attack lifecycle, while colloquially, being ‘under attack’ means the attack execution phase specifically.

What Do We Mean by Sophisticated?

A sophisticated automated attack is defined as such by the tactics, techniques, procedures and tools used, often in combination, rather than the sophistication of the bot software exclusively. Separately we do have a framework that looks at the four generations of bot management and considers the evolution of bots themselves, but that will be a future article.

In terms of attack sophistication, at one end of the spectrum, we consider a DDoS (Distributed Denial of Service) attack as an example of a ‘dumb’ or unsophisticated automated attack – obvious, noisy and static – whose purpose is to consume resources until a service or application becomes unavailable to legitimate users. DDoS attacks are inelegant in that they try to achieve their aim by saturating the resources available to the target, rotating in new IP addresses as existing sources of traffic are blocked by defensive tools.

At the other end of the spectrum are automated attacks that layer complex techniques to obfuscate their origin with automated tools programmed to behave so much like humans that they are (almost) indistinguishable from them. The intent of these attacks is to remain undetected for as long as possible and keep the window of opportunity for profit open, while still operating at scale. This is where Netacea focuses its efforts as a highly specialist detection solution.

The spectrum in between these two opposites is defined by how well the human adversary knows how to use the tools available to them. Essentially, less capable adversaries can use complex tooling to create unsophisticated attacks that are easily detectable by bot management solutions, while adversaries with a richer imagination can use the same tools to design an attack that is harder to detect and/or mitigate, requiring specialist tools and insight to defend against.

Like most professional criminal activity, sophistication is also driven by the size of the prize. If the ROI opportunity is good enough, organized criminal gangs will invest in resources and find ways to make the attack more sophisticated to increase its chance of success.

Attack Signals We Analyze

Sophisticated bots attempt to hide within legitimate visitor traffic and in some cases web traffic levels will be spiking, because the attack execution coincides with an ‘event’ like a ticket release or product launch. This makes it harder to look for obvious anomalies like a sudden increase in traffic.

When an attack is being executed every visitor is more likely to be a bot. There are a number of signals we use to determine whether a visitor is part of an attack or not, and we use both behavioral analytics and intent analytics to make this decision.

The approach we take in detection modelling also means monitoring all interactions between each visitor and the web server all the time, not just the first request, because some of the more sophisticated bots are scripted to behave like legitimate visitors and their true intent does not become apparent until later in the digital user journey. This makes behavioral analysis alone unreliable.

What is Intent Analytics?

Some of the attack signals we use to define malicious intent include:

- Anomalies in length of time spent on site versus typical visitors

- Anomalies in construction of HTTP requests

- Abnormal number of User IDs associated with one IP (Netacea allocates a proprietary User ID to assign an ‘identity’ to each visitor without ingesting PII)

- Abnormal proportion of requests to a specific set of paths

- Abnormal navigation of paths

- Consistent failure to load all content on page

- Abnormal volume of requests versus typical visitors

- Rotation of cookies

- Abnormal patterns or format of headers

- Reputational information about the IP address

- Unusual locations for source traffic

The attack signals are analyzed in real time and combined with verified signals we have already detected from across the Netacea customer network to create attacker fingerprints. The size of our protected customer base means the same adversaries and botnets pop up time and time again, adding to our extensive library of fingerprints. The insights we gain from an attack on one customer can be used to the benefit of all other customers and get us to the point where we can confidently detect most malicious visitors before their first request is completed.
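To make one of the signals listed above concrete, here is a minimal Python sketch of the ‘abnormal number of User IDs associated with one IP’ check. It is an illustration only and not Netacea’s detection model: the log format, the `user_ids_per_ip` and `flag_abnormal_ips` helpers, and the ‘five times the median’ threshold are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import median

# Hypothetical request log as (ip, user_id, path) tuples.
# Ten ordinary visitors, plus one IP cycling through twenty identities.
sample_requests = [(f"198.51.100.{i}", f"user-{i}", "/product") for i in range(10)]
sample_requests += [("203.0.113.9", f"rotating-{i}", "/checkout") for i in range(20)]


def user_ids_per_ip(requests):
    """Count the distinct User IDs seen behind each IP address."""
    ids = defaultdict(set)
    for ip, user_id, _path in requests:
        ids[ip].add(user_id)
    return {ip: len(seen) for ip, seen in ids.items()}


def flag_abnormal_ips(requests, multiple=5):
    """Return IPs whose User ID count exceeds `multiple` times the median.

    The threshold is an assumed value for illustration; a real system
    would tune it per site and combine it with other signals.
    """
    counts = user_ids_per_ip(requests)
    baseline = median(counts.values())
    return sorted(ip for ip, n in counts.items() if n > multiple * baseline)


print(flag_abnormal_ips(sample_requests))
```

On its own a signal like this is weak, because shared networks can legitimately put many visitors behind one address, which is why it is combined with the other signals rather than used in isolation.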

Putting it All Together

From an adversary’s point of view, you might think the obvious plan of attack here is to record a legitimate visitor traversing a site and replicate that behavior with an automated tool. But if that same exact visitor appeared on a website 1,000 times it would look suspicious, so threat actors need to configure their tools to appear to be 1,000 different visitors. This is where it gets challenging for an unsophisticated adversary.

The thing about humans is they tend not to be uniform. They’re chaotic and unpredictable and get distracted and change their minds a lot. These are all things that are very difficult to script, and difficult for a machine following logical instructions to mimic.

If you changed only one property in that script, the IP address for example, the visitors would still look suspicious because the device identifier or user agent for the browser would still be identical. Let’s say you managed to change multiple properties – enough to appear to be different users on different devices – you then have 1,000 visitors following exactly the same path through your website in exactly the same time frame, which again looks suspicious.
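As a rough sketch of why identical journeys stand out, the Python below groups visitors by the exact sequence of paths they followed and the (rounded) time at which they reached each step. The session format, the `shared_journeys` helper and the `min_visitors` threshold are hypothetical, chosen only to illustrate the idea; this is not a description of how Netacea implements it.

```python
from collections import defaultdict

# Hypothetical sessions: visitor ID -> list of (path, seconds since first request).
sample_sessions = {
    "bot-1": [("/", 0.0), ("/drop", 1.1), ("/checkout", 2.2)],
    "bot-2": [("/", 0.0), ("/drop", 1.2), ("/checkout", 2.1)],
    "bot-3": [("/", 0.0), ("/drop", 0.9), ("/checkout", 2.3)],
    "human-1": [("/", 0.0), ("/search", 14.6), ("/drop", 41.0), ("/checkout", 95.3)],
    "human-2": [("/", 0.0), ("/drop", 22.8), ("/", 30.1), ("/drop", 48.7)],
}


def shared_journeys(sessions, min_visitors=3):
    """Return groups of visitors whose path and timing sequences match.

    Timings are rounded to whole seconds so that replays with a little
    jitter still collapse into the same group.
    """
    groups = defaultdict(list)
    for visitor, steps in sessions.items():
        key = tuple((path, round(seconds)) for path, seconds in steps)
        groups[key].append(visitor)
    return [sorted(visitors) for visitors in groups.values()
            if len(visitors) >= min_visitors]


print(shared_journeys(sample_sessions))
```

Even though the three scripted visitors could present different IP addresses and user agents, they share one journey, while the two human visitors each produce a journey of their own.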

We’ve seen it all: nonsense headers claiming to be iPhone versions or Chrome browser versions that don’t exist; multiple visitors from a single IP address rotating through many different cookies or header data; visitors traversing a website with inhuman speed; large volumes of visitors that follow a site’s seasonality perfectly but are out of sync with historical seasonality by a few hours; volumetric visits from India or Russia to a site that trades exclusively in North America, and more.

And that’s just the bots. Malicious humans behave differently to legitimate visitors as well. Because they’re repeating the same actions over and over again, they tend to develop a rhythm. They know the site too well. They navigate too quickly through paths or hit an endpoint without following a typical journey. They know that the next step in their journey requires them to click a button in the top right, so their cursor is already there, while an unfamiliar user would need to mentally process the page in front of them.

A sophisticated attack, in the lexicon of the anti-bot community, has a high degree of distribution – that is to say there are no patterns beyond those generated organically (such as seasonality) and few repeating properties across visitors.
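One way to put a number on ‘few repeating properties across visitors’ is normalized Shannon entropy, sketched below in Python. This is our own illustrative choice of measure, not a metric the anti-bot community or Netacea is known to standardize on, and the `normalized_entropy` helper and sample values are assumptions. A score of 0 means every visitor shares the same value for a property; a score of 1 means no value repeats.

```python
import math
from collections import Counter


def normalized_entropy(values):
    """Shannon entropy of `values`, scaled to the range 0..1.

    Dividing by log2(len(values)) means the score reaches 1.0 only
    when every observed value is unique.
    """
    counts = Counter(values)
    total = len(values)
    if len(counts) <= 1:
        return 0.0  # zero or one distinct value: nothing is distributed
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return entropy / math.log2(total)


# Hypothetical user-agent strings observed across four visitors.
clumsy_attack = ["ua-a", "ua-a", "ua-a", "ua-a"]      # one value repeated
distributed_attack = ["ua-a", "ua-b", "ua-c", "ua-d"]  # no repeats

print(normalized_entropy(clumsy_attack))
print(normalized_entropy(distributed_attack))
```

A highly distributed attack would score close to 1 on most properties at once, which is exactly what makes it hard to separate from organic traffic using any single property.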

CAPTCHA Farming: Attackers Going the Extra Mile

For the most part, adversaries are opportunistic. But in situations where the potential reward is worth additional investment, we see attacks incorporate tactics like residential proxies and CAPTCHA farms.

Residential proxies, or resi-proxies, are compromised devices or routers on consumers’ home networks. Malware creators infect devices to create botnets that can be rented out to other criminals. Residential IPs are attractive because we typically see legitimate traffic coming from these addresses, and retailers or other brands are reluctant to deploy anything in their defense that might cause friction to the user journey and derail a potential sale.

Netacea’s library of Known Attack Signals will tell us if we’ve seen malicious activity from such an address before and to treat this visitor with extra suspicion. We don’t want to be heavy-handed because while the device may be compromised, it might actually be in use by the unwitting owner at this point in time. So, while we have probable cause for malicious intent we would then look closely at the visitor behavior in real time to confirm or deny our suspicions.

Instead of hard blocking, a check would typically be used – the familiar ‘are you a robot’ CAPTCHA test. In this case, sophisticated and savvy criminals may employ a CAPTCHA farm – a team of real humans to solve these challenges. But because these humans are not using the actual device, these solving requests need to be taken out of line, solved, and the response injected back into the original request queue. This will raise some anomalies that we can detect and is a benefit of our approach of looking at all the server traffic all the time, and not just checking a visitor’s validity on their first request to a site.

How Do We Know We’ve Stopped a Sophisticated Attack?

We’ve described what adversaries do to try and obfuscate their attacks, so how do we know when we’ve been successful in detecting them?

In one example we knew from chatter on fraud forums that a scalping attack was planned against a fashion retailer for a high-demand product drop, and we were able to pre-empt most of the automated attack based on known botnets and techniques with hard blocks.

When the dynamic part of the automated attack executed and we needed to respond in real time to sources and techniques we weren’t already aware of, Netacea Bot Protection leapt into action with reactive detection models.

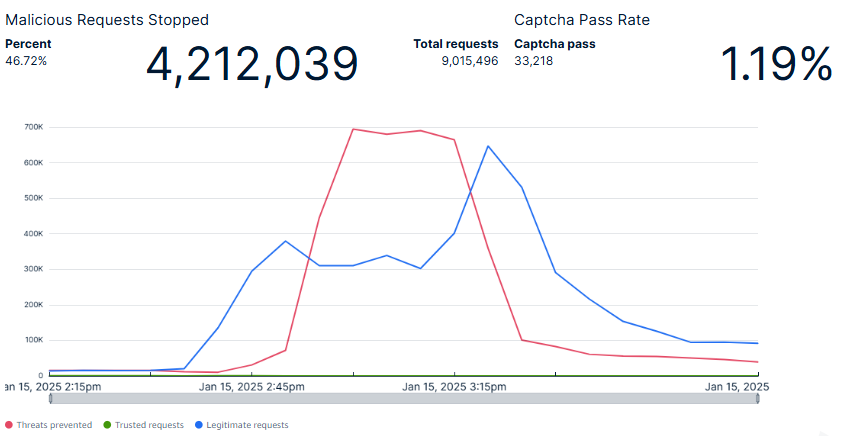

The very small green line is known trusted requests, the blue curve is legitimate requests, and the red spike is malicious requests detected.

The event was all over in about 60 minutes, during which time Netacea’s Intent Analytics models were able to detect in real time that 55% of the total traffic attempting to access the product drop was malicious and automated. About 4.2 million malicious requests were mitigated. Approximately 20% of this malicious traffic attempted to pass CAPTCHA and was automatically detected and blocked. The attack traffic was incredibly distributed, originating from a combination of residential proxies and hosting providers, and used up-to-date browser identifiers in an attempt to avoid detection. Regardless of these attempts, the product drop was successful from the retailer’s perspective, with no reports of false positives from the customer.
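To give a sense of scale, the short Python calculation below derives the implied totals from the figures quoted above. It assumes that the 55% share is measured in requests and that the 4.2 million mitigated requests make up the whole of that malicious share; neither assumption is confirmed here, so the results are rough estimates only.

```python
# Figures quoted for the product drop.
malicious_requests = 4_200_000
malicious_share = 0.55   # share of total traffic flagged as malicious
captcha_share = 0.20     # share of malicious traffic that attempted CAPTCHA

# Derived estimates, valid only under the assumptions stated in the text.
total_requests = malicious_requests / malicious_share
legitimate_requests = total_requests - malicious_requests
captcha_attempts = malicious_requests * captcha_share

print(f"Estimated total requests:      {total_requests:,.0f}")
print(f"Estimated legitimate requests: {legitimate_requests:,.0f}")
print(f"Estimated CAPTCHA attempts:    {captcha_attempts:,.0f}")
```

Under those assumptions the event saw roughly 7.6 million requests in total, of which about 3.4 million were legitimate, and around 840,000 malicious requests attempted to pass CAPTCHA.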

But the best feedback we get is from the fraudsters themselves. After the developers of the bot used in the attack above made some proxy updates to their tool, we responded with new detection models and watched the frustration build in the closed fraud forums we have access to.

When customers of the bot developers start complaining that the tools are getting blocked, this undermines the credibility of the bot developers and jeopardizes their ability to keep selling their tools.

It’s also the best confirmation that Netacea is making a difference and making big brands and their customers safer.

Understand the full lifecycle of business logic attacks and see how there are several other phases that provide opportunity for disruption of an attack before the execution phase.